If humanity could be caught thinking in real time, what would it look like? Wikipedia is the closest thing we have to a globally interconnected brain: one that is packed full of knowledge from every corner of the globe, always in flux, and rarely out of date.

“In the Wikipedia universe, reality cannot be pinned down with finality.”

Measuring behavior using open source tools

In this blog post, we’re going to use open source tools to build a system that performs real-time analysis on live changes being made across Wikipedia.

The source code for this example can be found here.

Wikimedia Event Platform

The Wikimedia Foundation is one of the first organizations in the world to create a modern, real-time, event-driven platform for public use based on Apache Kafka.

Wikimedia’s Modern Event Platform architecture

The diagram above (courtesy of Wikimedia) describes the various components of the Wikimedia event platform. Kafka is at the center of the architecture and keeps data flowing as events are created by the various Wikimedia properties. Kafka acts as the stateful backbone of the system, allowing the platform to ingest extremely large volumes of events that capture the real-time behavior of users on one of the most trafficked websites on the planet.

Querying in Real-time with Apache Pinot

Apache Pinot was created at LinkedIn, as was Kafka, to power analytics for business metrics and user-facing dashboards. Since then, it has evolved into one of the most performant and scalable analytics platforms for high-throughput event-driven data. What makes Pinot so powerful is that it plugs right into the kind of system that Wikimedia has built on top of Kafka.

Pinot scales based on the same principles as Kafka when it comes to performance, which makes it a go-to solution for running SQL queries on events that are stored in Kafka topics. There’s no need to mess with custom serializers or to do heavy lifting to support long-running applications that perform stream processing.

The Apache Pinot storage model.

Pinot is completely self-service for developers and operators, and provides a storage model that makes sense for modern event-driven platforms. Not only does it scale to high event volumes, it was also built to meet the organizational demand for fast real-time analytics on things happening right now.

Building the application using Spring Boot

The application framework I chose was Spring Boot, which provides a robust solution for reactive streams. Spring continues to evolve, even though the oldest production deployments of Spring Boot applications are now almost a decade old. To keep pace with the demands of modern event-driven applications built on Apache Kafka, the Spring team led the charge back in 2017 and has since introduced a fully end-to-end reactive application framework that is integrated across the Spring ecosystem of libraries.

As a result of yet another Spring transformation, this time focused on high-performance event-driven applications, new patterns for building reactive applications keep surfacing. Having used Spring Boot for nearly a decade, I decided to put the new reactive goodies to work analyzing real-time events published by the Wikimedia platform.

The example application’s source code that I discuss in this blog post can be found on GitHub. There you will find more specific instructions for setting up the end-to-end example as well as usage information.

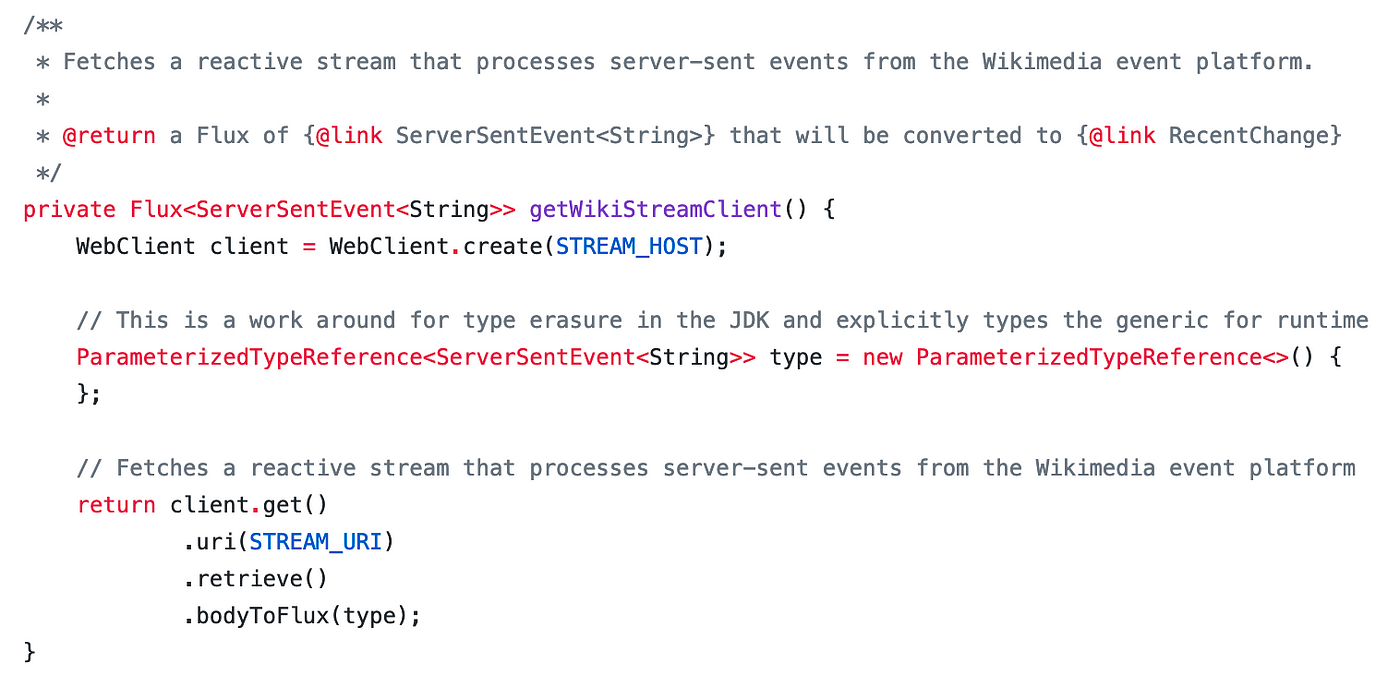

The first thing we’ll do is create a reactive stream that processes recent changes being reported by Wikimedia’s event platform.

Returns a reactive streams subscriber that processes server-sent events (SSE) from the Wikimedia recent change stream API. (RecentChangeProcessor.java)

Here, I create a stream client that will process each server-sent event that is emitted by the recent change API. Now I have a way to subscribe to the recent changes as they are happening. All changes across Wikipedia go through this pipe, at a rate of about 50 changes per second. The next thing I need to do is create a decoration job that will replicate the server-sent events into a Kafka topic that I control.
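To make the server-sent event framing a little more concrete, here is a minimal sketch in plain Java of how a client might pull the data payloads out of an SSE stream. It is not the reactive code from RecentChangeProcessor. The class name `SseSketch`, the method `readDataPayloads`, and the sample payloads are all made up for illustration, and the sketch ignores every SSE field except `data:`.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

class SseSketch {

    /**
     * Collects the data payload of each complete server-sent event.
     * An event ends at a blank line; multiple data lines are joined with '\n'.
     */
    static List<String> readDataPayloads(Iterable<String> lines) {
        List<String> payloads = new ArrayList<>();
        StringBuilder data = new StringBuilder();
        boolean hasData = false;
        for (String line : lines) {
            if (line.isEmpty()) {
                // A blank line dispatches the event collected so far
                if (hasData) {
                    payloads.add(data.toString());
                }
                data.setLength(0);
                hasData = false;
            } else if (line.startsWith("data:")) {
                String value = line.substring("data:".length());
                if (value.startsWith(" ")) {
                    value = value.substring(1); // drop the single optional leading space
                }
                if (hasData) {
                    data.append('\n');
                }
                data.append(value);
                hasData = true;
            }
            // Other fields (event:, id:, retry:) and ":" comment lines are skipped here
        }
        // An event that is not terminated by a blank line is dropped
        return payloads;
    }

    public static void main(String[] args) {
        // Hypothetical sample input, not real Wikimedia output
        String sample = "event: message\nid: 1\ndata: {\"title\":\"Apache Kafka\"}\n\n"
                + ": heartbeat\n\n"
                + "data: {\"title\":\"Apache Pinot\"}\n\n";
        List<String> lines = Arrays.asList(sample.split("\n", -1));
        readDataPayloads(lines).forEach(System.out::println);
    }
}
```

In a real client the lines would come from a long-lived HTTP response rather than a string, and each payload would be deserialized into a change event before being passed downstream.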

As part of my research for putting together the example application discussed in this blog post, I relied on the help of friends. Xiang Fu, one of the co-authors of Apache Pinot, provided me with an insight that helped me wrap my head around using Kafka for event-driven data analysis. Xiang mentioned that the best way to query immutable events, which may number in the hundreds of millions and potentially billions, is to avoid joining tables.

Tables have always been a pain to deal with in relational databases, and that’s nothing new. We still have tables today because SQL tends to be the most widely used language for querying data. While it’s probably not the best way to query raw event streams, it turns out to be the best option for business analysts or developers who need to quickly build reports on top of data sources that were originally shaped to fit in tables. This problem is famously known as an impedance mismatch, which simply means that the best model for querying data isn’t always the best model for storing data. That forces us to translate between models while sacrificing things like performance, availability, or consistency.

Xiang gave me a new way to think about this. Sometimes you’re not going to have all the data you need in an event stream, and joining real-time data represents a consistency trade-off. Take for example the Wikimedia “recent change” API, which has a schema that describes what happened when a user made a change to a Wikipedia article. These events are streamed from a Kafka topic and published over HTTP using server-sent events (SSE).

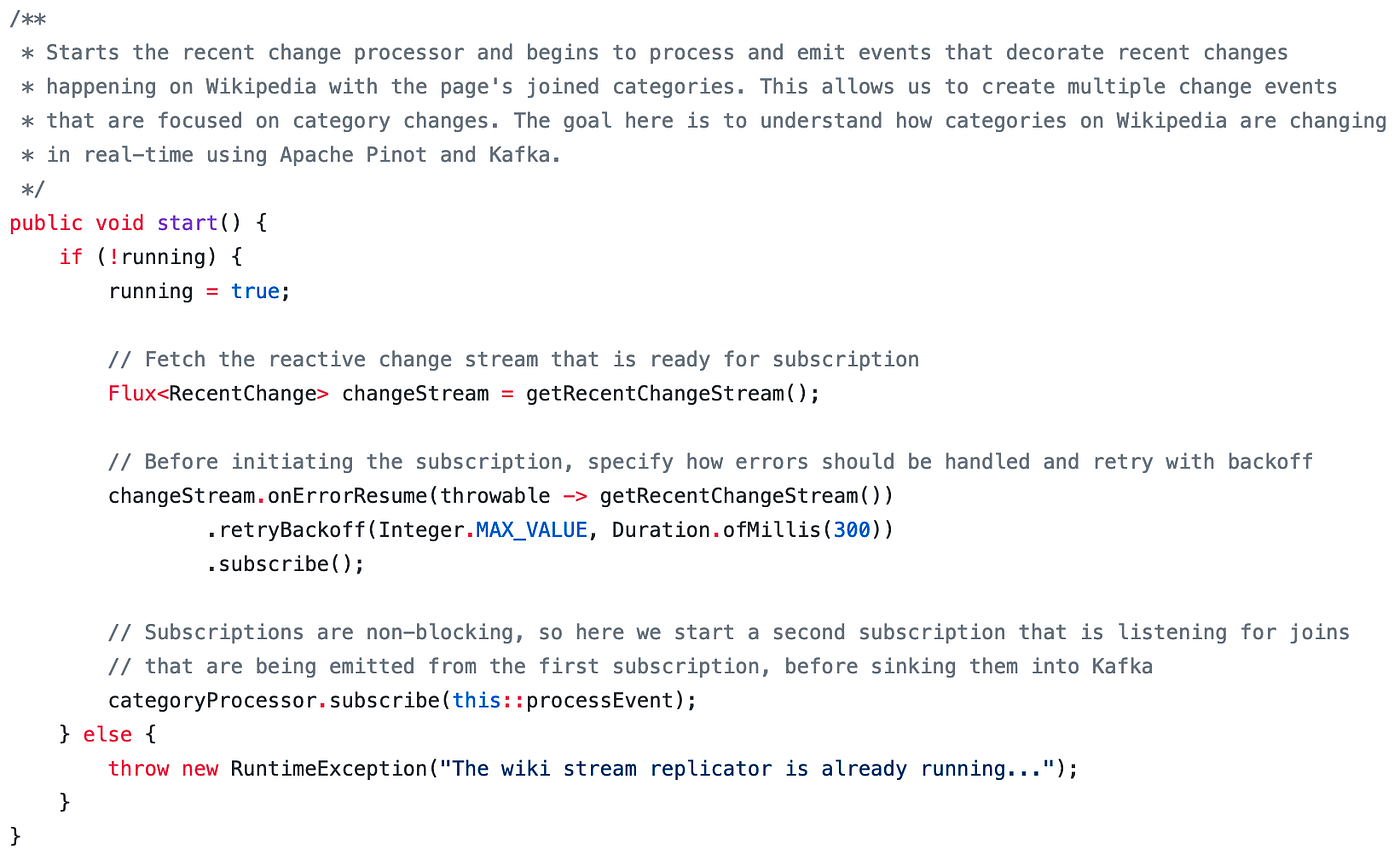

I can subscribe to these events, push them into a Kafka topic that I control, and then use that topic to run real-time queries as events arrive into Apache Pinot. The code below can be found in the RecentChangeProcessor.

Gets a reactive stream of recent Wikipedia changes and creates two subscriptions while specifying retries in case of errors from the Wikimedia API. (RecentChangeProcessor.java)
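The retry behavior mentioned in the caption above can be illustrated without a reactive library. The following is a rough sketch in plain Java, assuming a simple exponential backoff. `RetrySketch` and `withRetries` are hypothetical names, and the example application relies on the retry support of its reactive stream rather than on a helper like this one.

```java
import java.util.function.Supplier;

class RetrySketch {

    /**
     * Calls the task until it succeeds, allowing up to maxRetries additional
     * attempts after the first failure and doubling the wait between attempts.
     */
    static <T> T withRetries(Supplier<T> task, int maxRetries, long initialDelayMillis) {
        long delay = initialDelayMillis;
        for (int attempt = 0; ; attempt++) {
            try {
                return task.get();
            } catch (RuntimeException e) {
                if (attempt >= maxRetries) {
                    throw e; // out of retries: surface the last error
                }
                sleep(delay);
                delay *= 2; // exponential backoff
            }
        }
    }

    private static void sleep(long millis) {
        try {
            Thread.sleep(millis);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("Interrupted while waiting to retry", e);
        }
    }

    public static void main(String[] args) {
        int[] calls = {0};
        // Simulates an upstream API that fails twice before accepting a connection
        String result = withRetries(() -> {
            if (++calls[0] < 3) {
                throw new IllegalStateException("stream unavailable");
            }
            return "connected";
        }, 5, 10L);
        System.out.println(result + " after " + calls[0] + " attempts");
    }
}
```

Bounding the number of retries matters for a long-lived subscription: an unbounded retry loop would hide a permanent outage, while a bounded one lets the error reach whatever is supervising the job.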

The schema of the recent change feed has a foreign key relationship to other data models on the Wikimedia platform, which in this case is a changed article’s unique page ID. If I want to query in real time which categories on Wikipedia are changing most often, I need a join that relates a page to its categories. This join is costly because it requires consistency guarantees, which are difficult to provide outside of a transactional OLTP database.

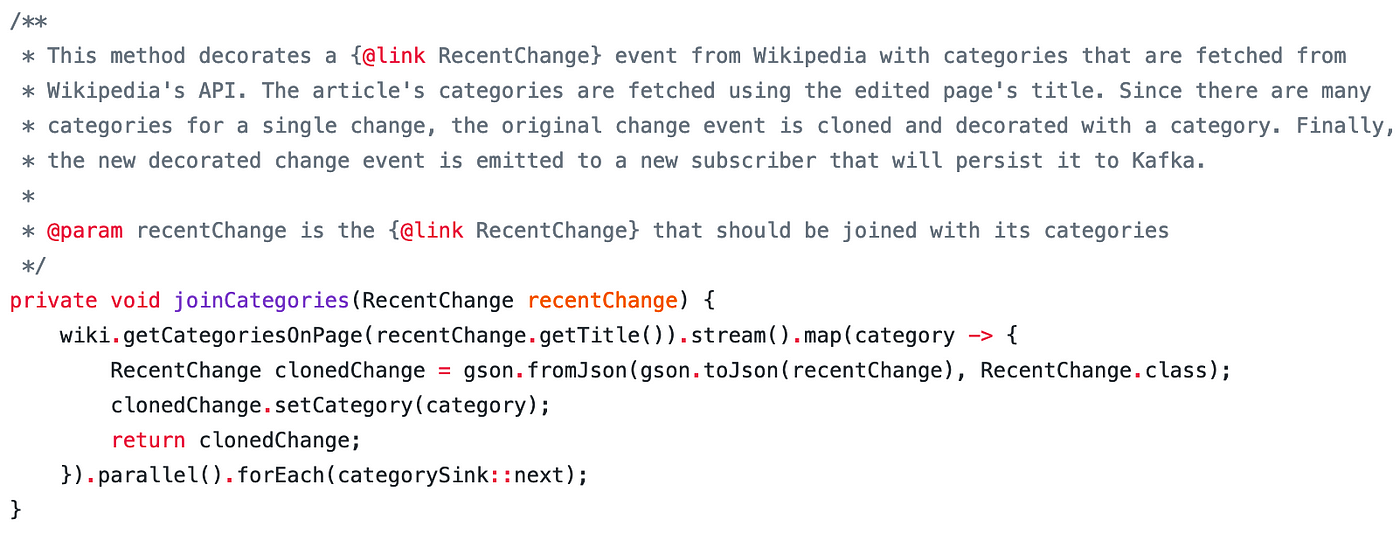

Decorates the recent change event with its page categories.

This problem becomes intractable with an OLTP database, like MySQL, when real-time analytical queries cause contention with the simple lookups that power the Wikipedia website.

The solution here is to use immutable event logs without sacrificing performance due to resource contention. To solve this problem with Apache Pinot and Kafka, we’ll use a pattern called event decoration. Event decoration simply adds a property to an event, while forking it to a new Kafka topic that can be ingested by Pinot.

Gets a reactive stream of Wikipedia changes that serialize server-sent events (SSE) before joining each page change with its categories from a separate API. (RecentChangeProcessor.java)

Event decoration is required when you don’t want to sacrifice performance in the face of a join that would require a consistency check. Instead of doing a join between two tables at query execution time, we can decorate an event from a Kafka topic by forking it into a new topic that adds an additional query dimension (such as adding article categories on recent changes).

For every recent page change from Wikipedia, its categories are fetched (HTTP GET) from a separate API and joined into a new event. This creates multiple category events per page and sends them to a reactive stream subscriber. (RecentChangeProcessor.java)
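The fan-out described here, one change event in and one event per category out, can be sketched in plain Java. This is only an illustration of the event decoration pattern under stated assumptions: the record types, their fields, and the `categoryLookup` function are hypothetical stand-ins, and the lookup takes the place of the HTTP call to the separate categories API.

```java
import java.util.List;
import java.util.function.Function;
import java.util.stream.Collectors;
import java.util.stream.Stream;

class EventDecorationSketch {

    // Hypothetical, simplified shape of a recent change event
    record RecentChange(long pageId, String title, String user) {}

    // The decorated event: the original fields plus one category
    record CategoryChange(long pageId, String title, String user, String category) {}

    /**
     * Decorates one change with its categories, emitting one new event per category.
     * A page without categories produces no events.
     */
    static Stream<CategoryChange> decorate(RecentChange change,
                                           Function<Long, List<String>> categoryLookup) {
        return categoryLookup.apply(change.pageId()).stream()
                .map(category -> new CategoryChange(
                        change.pageId(), change.title(), change.user(), category));
    }

    public static void main(String[] args) {
        // Stand-in for the remote category lookup; the data is made up
        Function<Long, List<String>> lookup =
                pageId -> List.of("Free software", "Message brokers");

        List<RecentChange> changes =
                List.of(new RecentChange(42L, "Apache Kafka", "example-user"));

        List<CategoryChange> decorated = changes.stream()
                .flatMap(change -> decorate(change, lookup))
                .collect(Collectors.toList());

        decorated.forEach(System.out::println);
    }
}
```

Because each decorated event already carries its category, a query such as "which categories change most often" becomes a simple group-by over a single table, with no join at query time.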

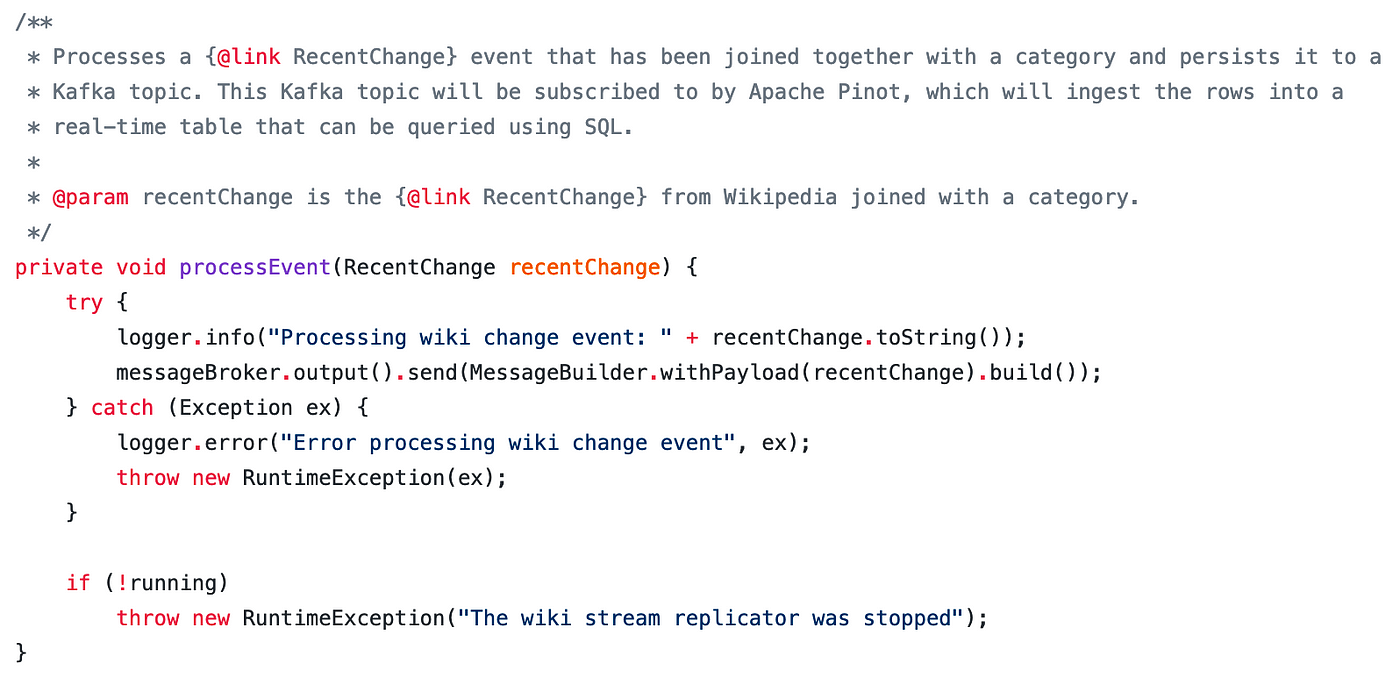

Now that I’ve reactively joined multiple categories to a single Wikipedia change using event decoration, I can finally persist the events I’ve generated by sending them to a topic in Apache Kafka.

Sends the category change event to a Kafka topic. (RecentChangeProcessor.java)

The next thing I will need to do is create a real-time table in Apache Pinot that will subscribe to these events and ingest each message as a row that we can query using SQL.

Querying in real-time with Apache Pinot

Real-time tables in Apache Pinot are designed to ingest high-throughput events from real-time data sources, such as Kafka. Pinot was designed to perform fast indexing and sharding on real-time data sources so that query performance can scale linearly on a per-node basis. This means that, no matter how many real-time tables you have, query performance increases as you add more nodes to a cluster.

When creating a real-time table, there are two things you need to prepare. First, you have to create a schema that describes the fields that you intend to query using SQL. Typically, these schemas are described as JSON, and you can create multiple tables that inherit the same underlying schema. The second thing you need to create is your table definition. The table definition describes what kind of table you want to create, for instance, a real-time table or a batch (offline) table. In this case, we’re creating a real-time table, which requires a data source definition so that Pinot can ingest events from Kafka.

The table definition is also where we describe how Pinot should index the data it ingests from Kafka. Indexing is an important topic in Pinot, as with almost any database, but it is especially important when we talk about scaling real-time performance. For example, text indexing is an important part of querying Wikipedia changes. We may want to write a SQL query that returns multiple categories using a partial text match. Pinot supports text indexing, which makes queries that need arbitrary text search extremely fast.

Creating a schema and table

To create a schema and table in Pinot for the real-time Wikipedia change events, I’ve generated two JSON files that can be found in the project’s source on GitHub. The best way to understand how to use Pinot is to look through the documentation, which stays up-to-date and provides various guides and learning resources. You can find the schema and table definition files here that are used in this example.

Since I’ve talked about schemas in a past blog post about analyzing GitHub changes in real-time, I’m only going to quickly go over the table definition file here, which connects to a Kafka topic for real-time Wikipedia changes that are joined by a page’s multiple categories.

Here we can see how the real-time table is connected to Kafka, with the topic name “wiki-recent-change”, which I have configured as the output sink in the reactive Spring Boot application.
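As a rough sketch of what the Kafka connection part of a real-time table definition carries, the Java snippet below assembles a set of stream settings and prints them as a JSON fragment. This is not the project’s actual table definition file. The property keys and plugin class names are the ones I recall from Pinot’s Kafka stream ingestion settings and should be checked against the Pinot version you run; the broker address is a placeholder, and `StreamConfigSketch` is a hypothetical helper.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.stream.Collectors;

class StreamConfigSketch {

    /** Builds the Kafka-related stream settings for a real-time table (assumed keys). */
    static Map<String, String> kafkaStreamConfigs(String topic, String brokerList) {
        Map<String, String> configs = new LinkedHashMap<>();
        configs.put("streamType", "kafka");
        configs.put("stream.kafka.topic.name", topic);
        configs.put("stream.kafka.broker.list", brokerList);
        // Assumed plugin class names; verify them against your Pinot release
        configs.put("stream.kafka.decoder.class.name",
                "org.apache.pinot.plugin.stream.kafka.KafkaJSONMessageDecoder");
        configs.put("stream.kafka.consumer.factory.class.name",
                "org.apache.pinot.plugin.stream.kafka20.KafkaConsumerFactory");
        return configs;
    }

    /** Renders a flat string map as JSON; the values used here need no escaping. */
    static String toJson(Map<String, String> configs) {
        return configs.entrySet().stream()
                .map(e -> "  \"" + e.getKey() + "\": \"" + e.getValue() + "\"")
                .collect(Collectors.joining(",\n", "{\n", "\n}"));
    }

    public static void main(String[] args) {
        // "localhost:9092" is a placeholder broker address
        System.out.println(toJson(kafkaStreamConfigs("wiki-recent-change", "localhost:9092")));
    }
}
```

The important detail is that the topic name in the table definition has to match the topic the Spring Boot application writes to, since that is the only link between the two systems.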

Surfacing real-time news in Wikipedia

One of the reasons I chose to create this application was to see if it was possible to surface real-time news from Wikipedia using Pinot. Looking at change events at a high level, without being able to query by category, I found there was too much noise to infer any kind of real-time news. After decorating the change events with their categories, it became much easier to see how the world, as described by Wikipedia, was changing in real time.

In the screenshot above, you’ll find a word cloud of the most frequently changing categories, measured over the course of a few hours. Wikipedia editors have a culture of creating categories based on an article’s importance to a particular subject. What I found really compelling was that each of these categories could be queried to see what was going on in deeper detail.

Here I am using Apache Superset to query results directly from Apache Pinot. In this query, I wanted to know why there were so many changes to the category “Low-importance pulmonology articles”. The results came back with a collection of talk pages, which host discussions where Wikipedia users debate changes to a page. A pattern started to emerge: it was indeed possible to get a view of both regional and world breaking news using Pinot.

In another query, I wanted to see why there were so many changes being made to high-importance China-related articles. It became clear that all these recent changes to important articles had some kind of relation to discussions on talk pages about the early timeline of the COVID-19 outbreak in China. To get a better view of what is happening inside a particular talk page, you can visit the page and see the comments that are being made. For example, take a look at Talk:COVID-19 Pandemic.

A piece of recent news that I was already aware of from the mainstream media was the COVID-19 related death of Roy Horn from Siegfried and Roy. I decided to run a SQL query to fetch all of the recent changes to articles related to the top-level category “Deaths”. The results did return top edits related to “Siegfried and Roy”.

In addition to being able to explore real-time breaking news, you can use Apache Superset and Pinot to create real-time dashboards showing how changes are happening on Wikipedia over time.

For more information about running Apache Superset together with Pinot, check out this blog post.

Conclusion

In this blog post, I showed you how to create a real-time change feed from Wikipedia that can be used to analyze and surface breaking news using Apache Pinot. We also walked through how to create a reactive event decoration job that forks Wikipedia change events into a new Apache Kafka topic, joining each change with its article categories. This example application is exciting, and it leaves plenty of room for improvement in the future.

Special thanks

A very special thanks to the Apache Pinot authors and committers who helped me create the example application in this blog post. My thanks go out to Xiang Fu, Kishore Gopalakrishna, Alex Pucher, Neha Pawar, and Siddharth Teotia.