This article introduces a sample application that combines multiple microservices with a graph processing platform to rank communities of users on Twitter. The sample application is built from a collection of popular tools, including Spring Boot, Spring Cloud, Neo4j, Apache Spark, and Docker.

Ranking Twitter Profiles

Let’s start with an overview of the problem our sample application will solve: how to discover communities of influencers on Twitter using a set of seed profiles as inputs. Solving this problem without a background in machine learning or social network analytics might be a bit of a stretch, but we’re going to take a stab at it using a little bit of computer science history.

The PageRank algorithm, created by Google co-founder Larry Page, was first used by Google to rank web documents by analyzing the graph of backlinks between sites.

I dug up the original research paper on PageRank from Stanford for some inspiration. In the paper, the authors talk about the notion of approximating the "importance" of an academic publication by weighting the value of its citations.

> The reason that PageRank is interesting is that there are many cases where simple citation counting does not correspond to our common sense notion of importance. For example, if a webpage has a link to the Yahoo home page, it may be just one link but it is a very important one. This page should be ranked higher than many pages with more links but from obscure places. PageRank is an attempt to see how good an approximation to "importance" can be obtained just from the link structure.
>
> The PageRank Citation Ranking: Bringing Order to the Web

Now let’s take the definition described in the paper and apply it to our problem of discovering important profiles on Twitter. Twitter users typically follow other users to track their updates as a part of their stream. We can use the same reasoning behind using PageRank on citations to approximate the "importance" of profiles on Twitter. This reasoning tells us that it’s not the number of followers that makes a profile important. Instead, importance is measured by how important those followers are.

That’s exactly what we’re going to build in this article, and we’ll end up with something that looks like the following table.

| Rank | Name | Profile | Following | Followers | PageRank |
|---|---|---|---|---|---|
| 1. | Paul Ford | @ftrain | 175 | 31948 | 7368.2417 |
| 2. | harper | @harper | 1024 | 32452 | 6754.455 |
| 3. | Bret Victor | @worrydream | 35 | 37658 | 6747.585 |
| 4. | Lincoln Stoll | @lstoll | 872 | 41067 | 5976.3555 |
| 5. | kate matsudaira | @katemats | 20465 | 25799 | 5916.3843 |
| 6. | rands | @rands | 741 | 35079 | 5888.145 |
| 7. | Alex Payne | @al3x | 405 | 41099 | 5547.4307 |
| 8. | Chris Wanstrath | @defunkt | 5645 | 45310 | 4787.9644 |
| 9. | SaraReyChipps | @SaraJChipps | 1007 | 29617 | 4271.676 |
| 10. | Leah Culver | @leahculver | 491 | 30723 | 3852.3728 |
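To make the reasoning behind this ranking concrete, here is a minimal sketch of PageRank on a toy follower graph, written as a plain power iteration in Python. It is not the GraphX implementation used later in the article. The profile names, the `pagerank` and `follower_counts` helpers, the damping factor of 0.85, and the iteration count are all assumptions chosen for illustration. The scores are normalized to sum to 1, so they are not on the same scale as the PageRank values in the table above.

```python
def pagerank(follows, damping=0.85, iterations=50):
    """Approximate PageRank by power iteration.

    `follows` maps each profile to the list of profiles it follows.
    An edge u -> v means "u follows v", so rank flows from u to v.
    """
    nodes = set(follows)
    for targets in follows.values():
        nodes.update(targets)
    nodes = sorted(nodes)
    n = len(nodes)

    rank = {node: 1.0 / n for node in nodes}
    for _ in range(iterations):
        # Rank held by profiles that follow nobody is spread evenly,
        # so the scores keep summing to 1.
        dangling = sum(rank[u] for u in nodes if not follows.get(u))
        new_rank = {u: (1.0 - damping) / n + damping * dangling / n for u in nodes}
        for u, targets in follows.items():
            if targets:
                share = damping * rank[u] / len(targets)
                for v in targets:
                    new_rank[v] += share
        rank = new_rank
    return rank


def follower_counts(follows):
    """Count how many profiles follow each profile."""
    counts = {}
    for targets in follows.values():
        for v in targets:
            counts[v] = counts.get(v, 0) + 1
    return counts


# Hypothetical graph: "popular" has two obscure followers, while
# "expert" has a single follower, "hub", who is itself widely followed.
EXAMPLE_FOLLOWS = {
    "f1": ["hub"], "f2": ["hub"], "f3": ["hub"], "f4": ["hub"], "f5": ["hub"],
    "hub": ["expert"],
    "x1": ["popular"], "x2": ["popular"],
}

ranks = pagerank(EXAMPLE_FOLLOWS)
for name, score in sorted(ranks.items(), key=lambda item: -item[1]):
    print(f"{name:8s} {score:.4f}")
```

In this toy graph, `expert` has fewer followers than `popular` but ends up with the higher score, because its one follower is itself highly ranked. That is the same effect the paper describes for a single link from an important page.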

The first thing we’re going to need to worry about when building this solution is how we’re going to calculate PageRank on potentially millions of users and links. To do this, we’re going to use something called a graph processing platform.

What is a graph processing platform?

A graph processing platform is an application architecture that provides a general-purpose job scheduling interface for analyzing graphs. The application we’ll build will make use of a graph processing platform to analyze and rank communities of users on Twitter. For this we’ll use Neo4j Mazerunner, an open source project that I started that connects Neo4j’s database server to Apache Spark.

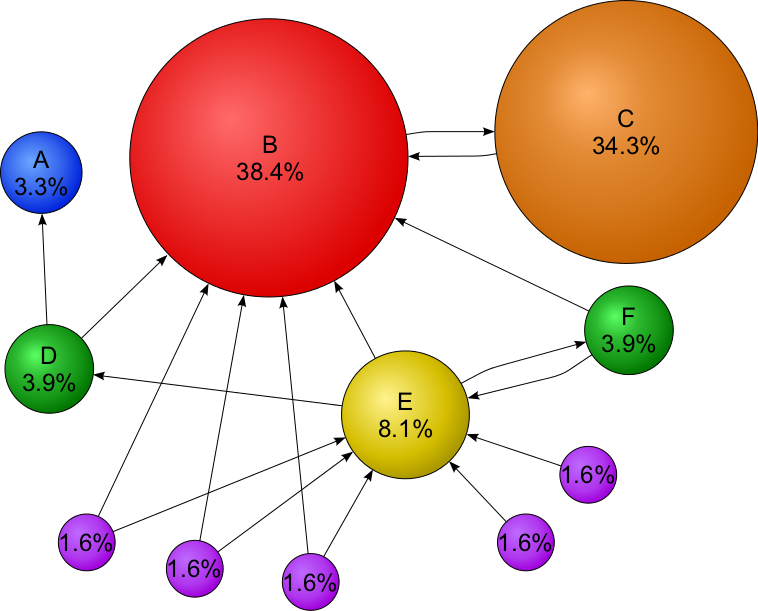

The diagram below illustrates a graph processing platform similar to Neo4j Mazerunner.

Submitting PageRank Jobs to GraphX

The graph processing platform I’ve described will provide us with a general purpose API for submitting PageRank jobs to Apache Spark’s GraphX module from Neo4j. The PageRank results from GraphX will be automatically applied back to Neo4j without any additional work to manually handle data loading. The workflow for this is extremely simple for our purposes. From a backend service we will only need to make a simple HTTP request to Neo4j to begin a PageRank job.
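As a rough illustration of that single HTTP request, the sketch below builds a job URL and sends a GET request using only the Python standard library. The host and port, the `/service/mazerunner/analysis/pagerank/{relationship type}` path, and the `FOLLOWS` relationship type are assumptions for illustration and are not confirmed by this article, so check the Mazerunner documentation for the actual endpoint. The function names are hypothetical.

```python
from urllib.parse import quote
from urllib.request import urlopen

# Assumption: Neo4j's HTTP interface is reachable on the local Docker host.
NEO4J_URL = "http://localhost:7474"


def pagerank_job_url(relationship_type, base_url=NEO4J_URL):
    """Build the URL for a PageRank job over one relationship type.

    The path layout is an assumed convention for the Mazerunner
    extension, not something this article specifies.
    """
    encoded = quote(relationship_type, safe="")
    return f"{base_url}/service/mazerunner/analysis/pagerank/{encoded}"


def submit_pagerank_job(relationship_type, base_url=NEO4J_URL, timeout=10):
    """Send the GET request that asks Neo4j to start the job.

    Returns the HTTP status code of the response.
    """
    url = pagerank_job_url(relationship_type, base_url)
    with urlopen(url, timeout=timeout) as response:
        return response.status


# Hypothetical relationship type connecting Twitter profiles.
print(pagerank_job_url("FOLLOWS"))
```

A backend service would call something like `submit_pagerank_job("FOLLOWS")` once the follower graph has been imported, then read the resulting ranks back from Neo4j.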

I’ve also taken care of making sure that the graph processing platform is easily deployable to a cloud provider using Docker containers. In a previous article, I described how to use Docker Compose to run Mazerunner as a multi-container application. We’ll do the same for this sample application but extend the Docker Compose file to include additional Spring Boot applications that will become our backend microservices.

Note: By default, Docker Compose will orchestrate containers on a single virtual machine. If we were to build a truly fault tolerant and resilient cloud-based application, we’d need to be sure to scale our system to multiple virtual machines using a cloud platform. This is the subject of a later article.

Now that we understand how we will use a graph processing platform, let’s talk about how to build a microservice architecture using Spring Boot and Spring Cloud to rank profiles on Twitter.

Building Microservices

I’ve talked a lot about microservices in past articles. When we talk about microservices, we are talking about developing software in the context of continuous delivery. Microservices are not just smaller services that scale horizontally. They are a way to create applications that are the product of many teams delivering continuously in independent release cycles. Josh Long and I describe at length how to untangle the patterns of building and operating JVM-based microservices in O’Reilly’s Cloud Native Java.

In this sample, we’ll build four microservices, each as a Spring Boot application. In an authentic scenario, each microservice would be owned and managed by a different team. This is an important distinction, as there is much confusion around what a microservice is and what it is not. A microservice architecture is not just a distributed system of small services, and the practice of building microservices should never be without the discipline of continuous delivery.

For the purposes of this article, we’ll focus on scenarios that help us gain experience and familiarity with building distributed systems that resemble a microservice architecture.

Overview

Now let’s do a quick overview of the concepts we’re going to cover as a part of this sample application. We will apply the same recipe from previous articles on similar topics for building microservices with Spring Boot and Spring Cloud. The key difference from my previous articles is that we are going to create a data service that both performs batch processing tasks and exposes data as HTTP resources to API consumers.

System Architecture Diagram

The diagram below shows each component and microservice that we will create as a part of this sample application. Notice how we’re connecting the Spring Boot applications to the graph processing platform we looked at earlier. Also notice the connections between the services. These connections define the communication points between services and the protocol each one uses.

The three applications colored in blue are stateless services. Stateless services do not attach a persistent backing service and do not need to worry about managing state locally. The application colored in green is the Twitter Crawler service. Components colored in green will typically have an attached backing service. These backing services are responsible for managing state locally, and will persist state either to disk or in memory.