PageRank is one of the most influential algorithms in computer science and modern technology. It shapes much of what the more than 3 billion people who use the internet see as they browse the World Wide Web.

How does PageRank work?

The first PageRank algorithm was developed by Larry Page and Sergey Brin at Stanford in 1996. The core idea was that pages on the World Wide Web could be ordered and ranked by analyzing the links that point to each page. This idea was the foundation of Google's rise to become the world's most popular search engine, which now handles over 3.5 billion searches every day.
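To make the idea concrete, here is a minimal sketch of the textbook iterative formulation of PageRank in plain Python. It is not the code Google runs, and not what Mazerunner does internally. The function name `pagerank`, the damping factor of 0.85, the fixed iteration count, and the tiny four-page `links` graph are all assumptions chosen for illustration.

```python
def pagerank(links, damping=0.85, iterations=50):
    """Rank pages by repeatedly passing each page's score along its outbound links.

    `links` maps each page to the list of pages it links to (illustrative format).
    """
    # Collect every page that appears as a source or as a link target.
    pages = set(links) | {t for targets in links.values() for t in targets}
    n = len(pages)

    # Start with the score spread evenly across all pages.
    ranks = {page: 1.0 / n for page in pages}

    for _ in range(iterations):
        # Every page keeps a small baseline score regardless of its links.
        new_ranks = {page: (1.0 - damping) / n for page in pages}
        for page in pages:
            targets = links.get(page, [])
            if targets:
                # A page splits its damped score evenly among the pages it links to.
                share = damping * ranks[page] / len(targets)
                for target in targets:
                    new_ranks[target] += share
            else:
                # A page with no outbound links spreads its score over every page.
                share = damping * ranks[page] / n
                for other in pages:
                    new_ranks[other] += share
        ranks = new_ranks
    return ranks


# Hypothetical four-page web: three pages link to C, and nothing links to D.
links = {"A": ["B", "C"], "B": ["C"], "C": ["A"], "D": ["C"]}
ranks = pagerank(links)

for page, score in sorted(ranks.items(), key=lambda item: -item[1]):
    print(page, round(score, 3))
```

In this toy graph, C ends up with the highest score because three pages link to it, while D, which nothing links to, is left with only the baseline. Note that A outranks B even though each has a single inbound link: A's link comes from the highly ranked C, so the score of the linking page matters as well as the number of links.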

PageRank gives us a measure of popularity in an ever more connected world of information. As the complexity of online information sharing grows every day, PageRank gives us a way to understand what is important to us as users.

The unfortunate part is that PageRank itself is mostly unapproachable to anyone but seasoned engineers and academics. That's why I want to make it easier for every developer around the world to build on this algorithm.

Distributing PageRank Jobs

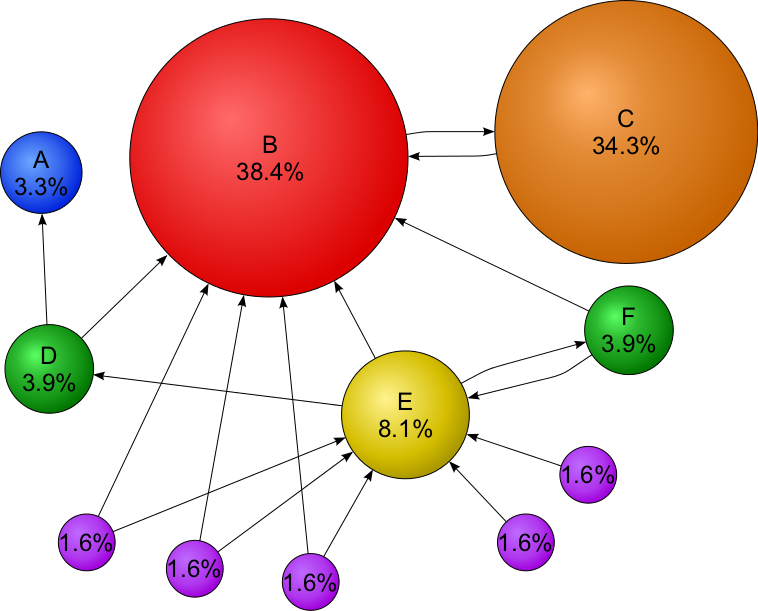

It should be no surprise to regular readers of this blog that I am all about the graph. Graphs are the best abstraction of data that we have today. The concept is brilliantly easy and intuitive. Nodes represent data points and are described by metadata. Relationships connect nodes together, are also described by metadata, and enrich the information of each node relative to the others.
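As a rough illustration of that model, here is a small sketch of nodes and relationships in plain Python. The `Node` and `Relationship` classes, their field names, and the sample pages are hypothetical and are not Neo4j's API; they only mirror the description above, where both nodes and relationships carry their own metadata.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    """A data point described by key/value metadata."""
    id: int
    properties: dict = field(default_factory=dict)


@dataclass
class Relationship:
    """A directed, typed connection between two nodes, with its own metadata."""
    start: int
    end: int
    type: str
    properties: dict = field(default_factory=dict)


# Hypothetical sample data: three pages and the links between them.
nodes = {
    1: Node(1, {"title": "Graph theory"}),
    2: Node(2, {"title": "PageRank"}),
    3: Node(3, {"title": "Search engine"}),
}
relationships = [
    Relationship(1, 2, "LINKS_TO", {"anchor_text": "ranking"}),
    Relationship(3, 2, "LINKS_TO", {"anchor_text": "PageRank"}),
    Relationship(2, 3, "LINKS_TO", {"anchor_text": "search"}),
]

# Relationships enrich each node: here we count inbound links per node.
inbound = {node_id: 0 for node_id in nodes}
for rel in relationships:
    inbound[rel.end] += 1

for node_id, count in inbound.items():
    print(nodes[node_id].properties["title"], count)
```

Counting inbound relationships like this is the simplest kind of question a graph can answer about a node, and it is the raw material that link-based ranking builds on.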

Neo4j Mazerunner Project

While building the open source project Neo4j Mazerunner, which uses Apache Spark GraphX and Neo4j for large-scale graph analysis, I've come to understand the need for breaking PageRank down into categories, something I call 'Categorical PageRank'.